As the debate rages in California and other places about the utility of the bar exam, it's become fairly clear that a number of separate but interrelated debates have been conflated. There are at least four distinct debates under way, and each requires a different line of thought--even if they are all ostensibly about the bar exam.

First, there is the problem of the lack of access to affordable legal representation. Such a lack of access, some argue, should weigh in favor of changing standards for admission to the bar, specifically regarding the bar exam. But I think the bar exam is only a piece of this debate, and perhaps, in light of the scope of the problem, a relatively small piece. Solutions such as implementing the Uniform Bar Exam; offering reciprocity for attorneys admitted in other states; reducing the costs of practicing law, such as by lowering the bar exam or annual licensing fees; finding ways to make law school more affordable; or opening up opportunities for non-attorneys to engage in the limited practice of law, as states like Washington have done, should all be considered in a deeper and wider inquiry. (Indeed, California's simple decision to reduce the length of the bar exam from three days to two appears to have encouraged more prospective attorneys to take it: the number of July 2017 test-takers is up significantly year over year, and most of that increase is not attributable to repeat test-takers.)

Second, there is the problem of whether the bar exam adequately evaluates the traits necessary to determine whether prospective attorneys are minimally competent to practice. It might be the case that requiring students to memorize large areas of substantive law and evaluating their performance on a multiple-choice and essay test is not an ideal way for the State Bar to operate. Some have pointed to a recent program in New Hampshire that evaluates prospective attorneys on a portfolio of work developed in law school rather than on the bar exam alone. Others point to Wisconsin's "diploma privilege," under which graduates of the University of Wisconsin and Marquette University are automatically admitted to the bar. An overhaul of bar admissions generally, however, is a project that requires a much larger set of considerations. Indeed, it is not clear to me that debates over things like changing the cut score, implementing the UBE, and the like are even really related to this issue. (That said, I understand why those who question the validity of the bar exam suggest that, if it does a poor job of separating competent from incompetent attorneys, it ought to carry little weight, and the cut score should therefore be relatively low to minimize its impact on likely-competent attorneys who may fail.)

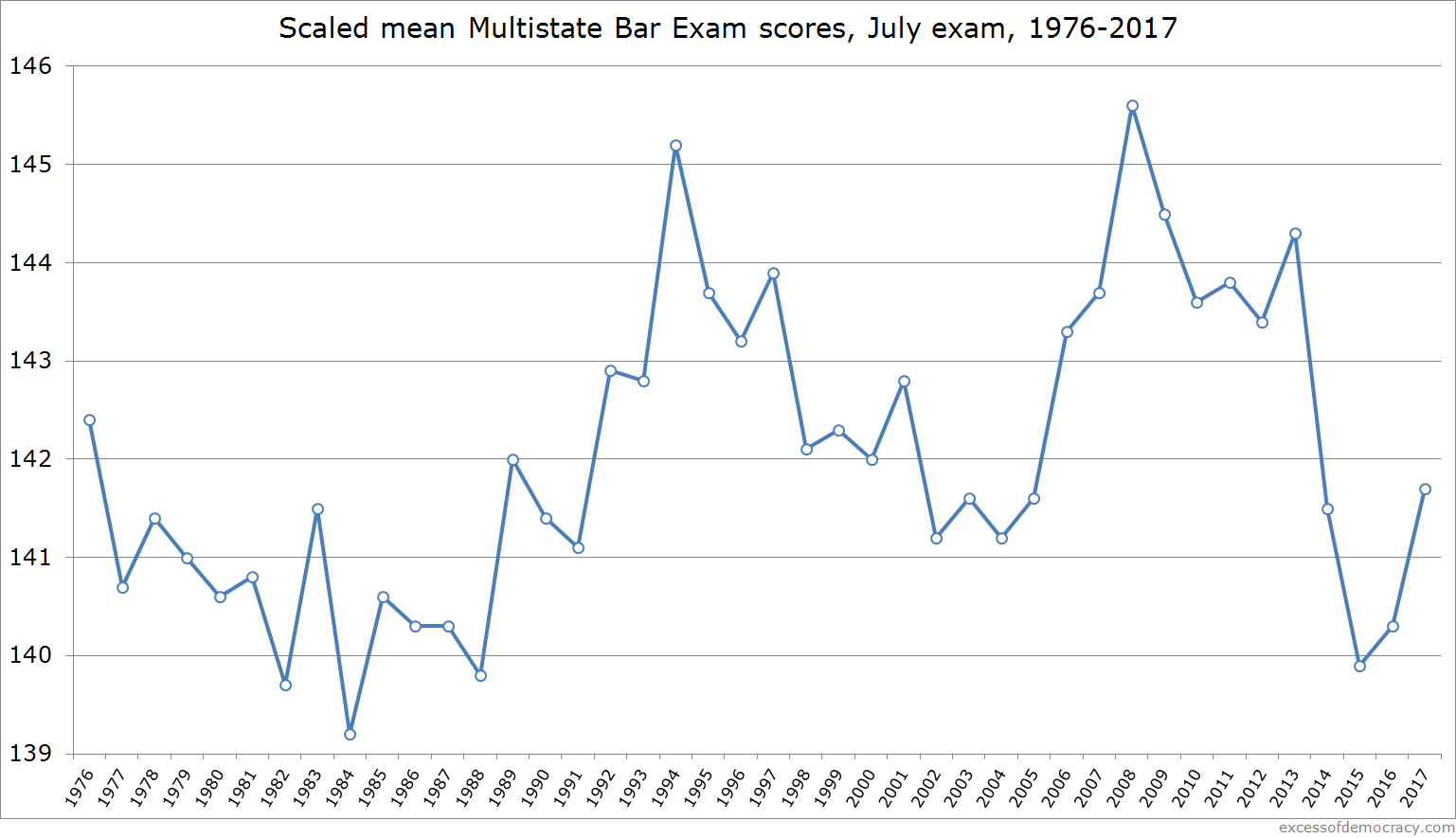

Third, there is the problem of why bar exam pass rates have dropped dramatically. This is a question of causation, one that has not yet been fully answered. The decline is not because the test has become harder, although some have pointed to incidents like the ExamSoft software glitch or the addition of Civil Procedure to the MBE as factors that may have contributed. A preliminary inquiry from the California State Bar, examining the decline in California alone, found that part of the drop in bar pass rates stems from a decline in the quality of the test-taker pool. I have become convinced that this accounts for the bulk of the decline nationally, too. An additional study in California is under way to examine this effect with more granular, school-specific data. If the cause is primarily a decline in test-taker quality and ability, then lowering the cut score would likely change the quality and ability of the pool of available attorneys. But if the decline is attributable to other factors, such as changes in study habits or test-taker expectations, then lowering the cut score may have less of such an impact. (Indeed, it appears that higher-quality students are making their way through law schools now.) Without a thorough attribution of cause, it is difficult to identify what the solution to this problem ought to be.

Fourth, there is the debate over what the cut score for the bar exam ought to be. I confess that I don't know what the "right" cut score is--Wisconsin's 129, Delaware's 145, something in between, or something different altogether. I'm persuaded that some evidence, like California's standard-setting study, may support keeping California's score roughly in place. But that study is just one component of many. And, of course, California's high cut score means that its test-takers fail at higher rates despite being more capable than most test-takers nationally. Part of my uncertainty is that I'm not sure I fully appreciate all the competing costs and benefits that come with changes in the cut score. While my colleague Rob Anderson and I find that lower bar scores are correlated with higher career discipline rates, facts like these can take one only so far in evaluating the "right" cut score. Risk tolerance and cost-benefit analysis have to do the real work.

(I'll pause here to note the most amusing part of the critiques of Rob's and my paper. We make a few claims: lower bar scores are correlated with higher career discipline rates; lowering the cut score will increase the number of attorneys subject to higher career discipline rates; and the State Bar has the data to evaluate the magnitude of the effect with greater precision. No one has yet disputed any of these claims. We don't purport to defend California's cut score, or to defend a higher or lower score. Indeed, our paper expressly disclaims such claims! Nevertheless, we've faced sustained criticism for a lot of things our paper doesn't do--which I suppose shouldn't be surprising given the sensitivity of the topic for so many.)

There are some productive discussions on this front. Professor Joan Howarth, for example, has suggested that states consider a uniform cut score. Jurisdictions could pool data and resources to develop a standard that they believe most accurately reflects minimum competence, without the idiosyncratic preferences of the current state-by-state process. Such a proposal is worth serious consideration.

It's worth noting that state bars have done a relatively poor job of evaluating their cut scores. Few evaluate them much at all, as California's decades-long lack of scrutiny demonstrates. (That said, the State Bar is now required to examine the bar exam's validity at least once every seven years.) States have been adjusting, and sometimes readjusting, their scores with little explanation.

Consider that in 2017 alone, Connecticut is raising its cut score from 132 to 133, Oregon is lowering it from 142 to 137, Idaho from 140 to 136, and Nevada from 140 to 138. Some states have undergone multiple revisions in a few years. Montana, for instance, raised its cut score from 130 to 135 for fear it was too low, then lowered it to 133 for fear it was too high. Illinois planned to raise its cut score from 132 in 2014 to 136 in 2016; after raising the score to 133, it delayed implementing the 136 cut score until 2017, then delayed it again in 2017 "until further order." Certainly, state bars could benefit from more, and better, research.

Complicating these inquiries are the mixed motives of many parties. My own biases and priors are deeply conflicted. At times, I find myself distrustful of any state licensing system that restricts competition, and I wonder whether the bar exam is very effective at all, given the closed-book, memory-focused nature of the test. I worry when many of my students who'd make excellent attorneys fail the bar, in California and elsewhere. At other times, I find myself persuaded by studies concerning the validity of the test (given its high correlation with law school grades, which, as a law professor, I think are often, but not always, good indicators of future success), and by the view that, if there's going to be a licensing system in place, it ought to be as good as it can be, flaws and all.

At times, though, I realize that these thoughts seem to be in tension because they often address different debates about the bar exam--maybe I'd want a different bar exam, but if we're going to have one, it's not doing such a bad job; maybe we want to expand access to attorneys, but the bar exam is hardly the most significant barrier to access; and so on. And maybe even here, my biases and priors color my judgment, and with more information I'd reach a different conclusion.

In all, I don't envy the task facing the California Supreme Court, or other state bar exam authorities, in addressing the "right" cut score for the bar exam during a turbulent time for legal education. The aggressive, often vitriolic, rhetoric from a number of individuals concerning our study on discipline rates is, I'm sure, just a taste of what state bars are experiencing. But I do hope they are able to set aside the lobbying of law deans and the protectionist demands of state bar members and think carefully, critically, and precisely about the issues as they come.